Generative artificial intelligence encompasses a complex array of technologies. You do not need an in-depth understanding of how these technologies work in order to use AI tools, but a basic grasp of the key concepts is helpful. This page introduces Generative AI and large language models (LLMs) and outlines how they have developed.

Text generated by XIPU AI (GPT 4)

XIPU AI:

Generative AI is a type of artificial intelligence that can create new content from scratch, such as text, images, music, or voice. It's trained on a large amount of data in a specific domain, and then, using probabilistic models, it can generate new examples within that domain. This opens up a world of possibilities for creating original content, such as writing articles, composing music, or designing graphics. One of the best-known examples of Generative AI is GPT-3, developed by OpenAI, which can generate coherent and contextually relevant text based on a given prompt.

XIPU AI:

A Large Language Model, often abbreviated as LLM, is a type of artificial intelligence model that has been trained on a vast amount of text data. The purpose is to generate human-like text that is contextually relevant and coherent. These models try to predict the next word in a sentence or paragraph based on the understanding of the preceding words. They use the probability of word sequences to make these predictions, learning from billions or even trillions of words from the training data. A popular example of a large language model is OpenAI's GPT-3 (Generative Pretrained Transformer 3). These models have a broad range of applications, including content creation, question answering, translation, and more. However, they also pose challenges in terms of ensuring appropriate use and managing potential biases in the model's output.

XIPU AI:

Large Language Models (LLMs) are a type of Generative AI used specifically for generating text. Generative AI, broadly, refers to systems that leverage machine learning to create or generate new content that maintains the statistical properties of the training data. LLMs are trained on extensive datasets of text in a particular language. During training, these models learn the patterns, nuances, and structures associated with the language. With this acquired knowledge, LLMs can generate new, coherent, and contextually relevant text passages, sentences, or responses, mimicking the language style they were trained on. So, the relation between LLMs and Generative AI is that LLMs are a specialized instance of Generative AI designed for human language processing and text generation. They are used extensively in natural language processing tasks like machine translation, text summarization, and conversational AI.

* Text generated by XIPU AI.

Try asking these questions in XIPU AI.

What's Generative AI?

Generative artificial intelligence is a type of artificial intelligence (AI) technology that can produce content such as audio, images, text, and video. Examples include ChatGPT, a chatbot that can produce essays, poetry, and other content requested by a user, and Stable Diffusion, which generates images and art. Generative AI is a subfield of machine learning and deep learning, which use data and algorithms to imitate the way humans learn, gradually improving in accuracy. While many machine learning systems classify or sort existing data, generative AI goes a step further and creates new content based on the data it has learned from. However, training large generative models remains expensive, so the largest models have mostly been built by well-financed organisations such as OpenAI, DeepMind, Meta and Baidu.

The relationship between AI, machine learning, deep learning, and Generative AI is depicted in the following diagram.

The key features of Generative AI include:

- training on large-scale data

- models with a large number of parameters (typically in the range of several billion)

- generating new content based on prompts

- representing the state of the art in artificial intelligence
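To give a rough sense of what "several billion parameters" means in practice, the short sketch below estimates the memory needed just to store a model's parameters. The 7-billion-parameter figure and the 2 bytes per parameter (16-bit numbers) are illustrative assumptions, not figures for any particular model mentioned on this page.

```python
def model_memory_gb(num_parameters, bytes_per_parameter=2):
    """Approximate memory needed to store the parameters, in gigabytes (10**9 bytes).

    bytes_per_parameter=2 assumes each parameter is stored as a 16-bit number;
    this is an illustrative assumption, and other storage formats are common.
    """
    return num_parameters * bytes_per_parameter / 1e9


# Hypothetical model with 7 billion parameters.
print(model_memory_gb(7_000_000_000))     # 14.0
print(model_memory_gb(7_000_000_000, 4))  # 28.0 (32-bit storage)
```

This counts storage for the parameters only. Training a model typically needs considerably more memory and computation than this estimate suggests.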

ChatGPT, a representative generative AI tool, became the most visited generative AI tool worldwide. The field of generative artificial intelligence has grown rapidly, starting with Large Language Models (LLMs) and progressively branching out into other areas.

For further reading on Generative AI and additional academic resources, please refer to Discover.

Large Language Model (LLM)

Large language models (LLMs) are crucial to generative artificial intelligence, serving as the foundation for AI applications in this field. For instance, ChatGPT can use various LLMs, including GPT-3.5 and GPT-4.

A large language model (LLM) is a language model notable for its ability to achieve general-purpose language generation and other natural language processing tasks such as classification. LLMs acquire these abilities by learning statistical relationships from text documents during a computationally intensive self-supervised and semi-supervised training process. LLMs can be used for text generation, a form of generative AI, by taking an input text and repeatedly predicting the next token or word. LLMs are artificial neural networks. The largest and most capable, as of March 2024, are built with a decoder-only transformer-based architecture while some recent implementations are based on other architectures, such as recurrent neural network variants and Mamba (a state space model). (Wikipedia, 2024)
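The idea of "repeatedly predicting the next token" can be illustrated with a deliberately tiny sketch. It is not how a real LLM is built: real models use neural networks trained on vast amounts of text, whereas this toy example simply counts which word follows which in a made-up sentence collection. The corpus, the function names, and the choice to always pick the most probable next word are all assumptions made for illustration.

```python
from collections import Counter, defaultdict

# Toy training text (hypothetical); real LLMs learn from billions of tokens.
CORPUS = "the cat sat . the cat sat . the dog ran ."


def train_bigram_counts(text):
    """Count how often each token is followed by each other token."""
    tokens = text.split()
    counts = defaultdict(Counter)
    for current, following in zip(tokens, tokens[1:]):
        counts[current][following] += 1
    return counts


def next_token_probabilities(counts, token):
    """Convert the follow-up counts for `token` into probabilities."""
    total = sum(counts[token].values())
    return {nxt: n / total for nxt, n in counts[token].items()}


def generate(counts, prompt, max_new_tokens=5):
    """Extend the prompt by repeatedly appending the most probable next token."""
    tokens = prompt.split()
    for _ in range(max_new_tokens):
        probs = next_token_probabilities(counts, tokens[-1])
        if not probs:  # the last token never appeared in the training text
            break
        tokens.append(max(probs, key=probs.get))
        if tokens[-1] == ".":  # treat the full stop as the end of the text
            break
    return " ".join(tokens)


counts = train_bigram_counts(CORPUS)
print(next_token_probabilities(counts, "the"))  # "cat" is twice as likely as "dog"
print(generate(counts, "the"))                  # the cat sat .
```

In the toy corpus, "the" is followed by "cat" twice and "dog" once, so "cat" is chosen. Production systems usually sample from the probabilities rather than always taking the most likely token, which is one reason the same prompt can give different answers.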

Generative AI and LLMs are often discussed together.

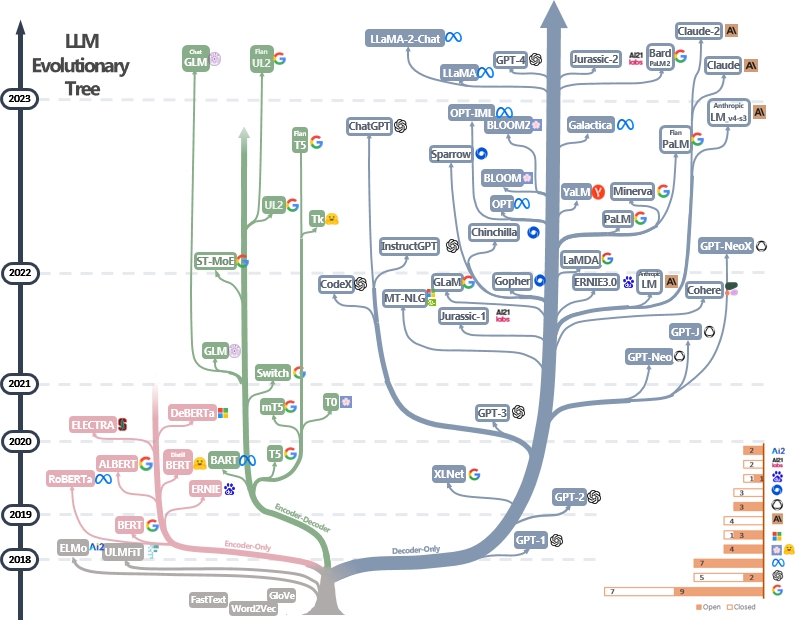

Above is the evolutionary tree of modern LLMs, which traces the development of language models in recent years and highlights some of the most well-known models, including LLaMA and the GPT-series models. Models on the same branch are more closely related. For further reading, please refer to

In 2023, generative AI reached a significant milestone with the emergence of numerous new tools and applications. These systems have proven invaluable across diverse industries and global business sectors. Explore the timeline showcasing significant advancements in generative AI and large language models (LLMs) from the inception of ChatGPT through the following links.